What is AI hardware? AI technology is advancing quickly and shapes everything from smart devices to healthcare breakthroughs, so the question is worth answering. Behind the complex algorithms and smart programs that power AI lies something just as important: AI hardware.

This specialized hardware is what makes AI practical, boosting both speed and efficiency. GPUs, TPUs, and NPUs are the most prominent players, but the AI hardware ecosystem is broader. It also includes FPGAs (Field-Programmable Gate Arrays), ASICs (Application-Specific Integrated Circuits), and even emerging quantum computing.

Let’s dive deeper into each component, exploring its unique features, strengths, and best use cases.

What is AI hardware?

AI hardware is specialized computer equipment designed to handle the heavy computing needs of artificial intelligence (AI) tasks. Regular CPUs (Central Processing Units) can manage many types of computing tasks, but they struggle with the intense demands of machine learning (ML) and deep learning (DL). Dedicated AI hardware, by contrast, is built to perform the mathematical calculations that AI models require much faster and more efficiently.

Understanding what AI hardware is can offer insight into how smart devices and the algorithms behind them actually work.

How does AI hardware work?

AI involves working with data structures called matrices and vectors, which represent data and the connections (weights) in neural networks. Operations on these structures, above all matrix multiplication, consist of huge numbers of simple multiply-and-add steps that can run in parallel. CPUs are optimized to run a relatively small number of tasks quickly and largely in sequence, so they are not built for parallelism on this scale.
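To make this concrete, here is a minimal sketch of one dense (fully connected) neural-network layer, computing y = W·x + b in plain Python. The function name `dense_forward` and the small sizes are illustrative assumptions rather than any framework's API. Each output is an independent dot product of one weight row with the input. That independence is exactly what parallel AI hardware exploits: this loop computes the rows one after another, while a GPU or TPU can compute many of them at once.

```python
def dense_forward(weights, x, bias):
    """Compute y = W @ x + b for a single input vector x.

    Each output element is an independent dot product of one weight row
    with x, so parallel hardware could compute all rows simultaneously.
    This plain-Python version computes them one at a time.
    """
    outputs = []
    for row, b in zip(weights, bias):
        acc = b  # start from the bias for this output neuron
        for w_ij, x_j in zip(row, x):
            acc += w_ij * x_j  # one multiply-add per weight
        outputs.append(acc)
    return outputs


# Hypothetical tiny layer: 2 outputs, 3 inputs.
W = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
x = [1.0, 0.0, -1.0]
b = [0.5, -0.5]

print(dense_forward(W, x, b))  # [-1.5, -2.5]
```

A layer with m outputs and n inputs needs m × n multiply-adds for every input vector. Real models stack many much larger layers, which is why this work is offloaded to hardware built for it.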

So what is AI hardware for? In short, it is designed to:

- Handle many operations at once: It performs many calculations at the same time, which is essential for training complex AI models.

- Access memory quickly: AI tasks need to move lots of data between memory and processing units. AI hardware is optimized to access data rapidly to prevent slowdowns.

- Run specific algorithms: Some AI hardware, like TPUs and ASICs, is specially built to run certain types of AI calculations very efficiently.
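One way to see why fast memory access matters is arithmetic intensity: the number of floating-point operations performed per byte moved to or from memory. Operations with low intensity end up waiting on memory rather than on compute. The back-of-the-envelope sketch below assumes an idealized case in which every operand is read once and every result is written once, all in 32-bit floats. Real kernels differ because of caching and tiling, and the function names are hypothetical.

```python
BYTES_PER_FP32 = 4  # assumption: 32-bit floating-point values

def matmul_intensity(n):
    """FLOPs per byte for an n x n by n x n fp32 matrix multiply (idealized)."""
    flops = 2 * n**3                           # n^3 multiply-adds = 2n^3 FLOPs
    bytes_moved = 3 * n * n * BYTES_PER_FP32   # read A and B once, write C once
    return flops / bytes_moved

def vector_add_intensity(n):
    """FLOPs per byte for element-wise c = a + b on length-n fp32 vectors."""
    flops = n                                  # one addition per element
    bytes_moved = 3 * n * BYTES_PER_FP32       # read a and b, write c
    return flops / bytes_moved

print(round(matmul_intensity(1024), 2))      # 170.67
print(round(vector_add_intensity(1024), 4))  # 0.0833
```

Large matrix multiplications reuse each loaded value many times, so they can keep thousands of arithmetic units busy. Element-wise operations do very little math per byte, so their speed depends almost entirely on memory bandwidth. This is one reason AI accelerators invest so heavily in high-bandwidth memory and on-chip buffers.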

AI hardware you should know

The main types of AI hardware include:

- GPUs (Graphics Processing Units): Known for their powerful parallel processing capabilities.

- TPUs (Tensor Processing Units): Custom processors built by Google for deep learning.

- NPUs (Neural Processing Units): Optimized for running AI tasks on edge devices.

- FPGAs (Field-Programmable Gate Arrays): Customizable chips for specific AI tasks.

- ASICs (Application-Specific Integrated Circuits): Chips designed for a single, specialized task.

- Quantum Computing: An emerging technology with potential for complex AI processing in the future.

Each type serves unique functions, catering to different AI needs based on performance, power consumption, and use cases. Let’s explore each of them.

GPUs (Graphics Processing Units)

GPUs, originally developed to enhance graphics in gaming and multimedia, are now a staple in AI computing. Unlike CPUs, which focus on sequential processing, GPUs (such as NVIDIA’s) are optimized for parallel processing. They can handle thousands of operations simultaneously, making them highly effective for training deep learning models that require vast amounts of data to be processed in parallel.
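GPU programming models such as NVIDIA's CUDA split parallel work into many lightweight threads grouped into blocks. Each thread computes its own global index (block index × block size + thread index) and handles one piece of the data. The sketch below is only a CPU-side simulation of that indexing scheme for the simple operation a·x + y ("SAXPY"). The helpers `saxpy_kernel` and `launch` are hypothetical Python stand-ins, not CUDA APIs, and nothing here actually runs in parallel.

```python
def saxpy_kernel(block_idx, thread_idx, block_dim, a, x, y, out):
    """Work done by ONE simulated GPU thread: a single element of a*x + y."""
    i = block_idx * block_dim + thread_idx  # CUDA-style global thread index
    if i < len(x):  # guard: the last block may have more threads than data
        out[i] = a * x[i] + y[i]

def launch(kernel, grid_dim, block_dim, *args):
    """Visit every (block, thread) pair in turn; a real GPU runs them concurrently."""
    for block_idx in range(grid_dim):
        for thread_idx in range(block_dim):
            kernel(block_idx, thread_idx, block_dim, *args)

n = 10
x = [float(i) for i in range(n)]
y = [1.0] * n
out = [0.0] * n

block_dim = 4
grid_dim = (n + block_dim - 1) // block_dim  # ceil(10 / 4) = 3 blocks
launch(saxpy_kernel, grid_dim, block_dim, 2.0, x, y, out)
print(out)  # [1.0, 3.0, 5.0, 7.0, 9.0, 11.0, 13.0, 15.0, 17.0, 19.0]
```

Because each thread touches only its own element, no thread has to wait for another. This is what lets a GPU spread the same computation across thousands of cores.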

Strengths:

- Parallel processing power: Capable of handling large-scale matrix multiplications needed for neural networks.

- Versatile application: Can be used for various tasks beyond AI, such as rendering and general data analysis.

- Widespread support: Backed by popular AI frameworks like TensorFlow, PyTorch, and Keras, ensuring seamless integration for developers.

Weaknesses:

- Energy consumption: GPUs require a lot of power, which increases operational costs and limits their use in mobile or low-power environments.

- General-purpose design: While powerful, they aren’t exclusively optimized for deep learning, leading to potential inefficiencies for specialized tasks.

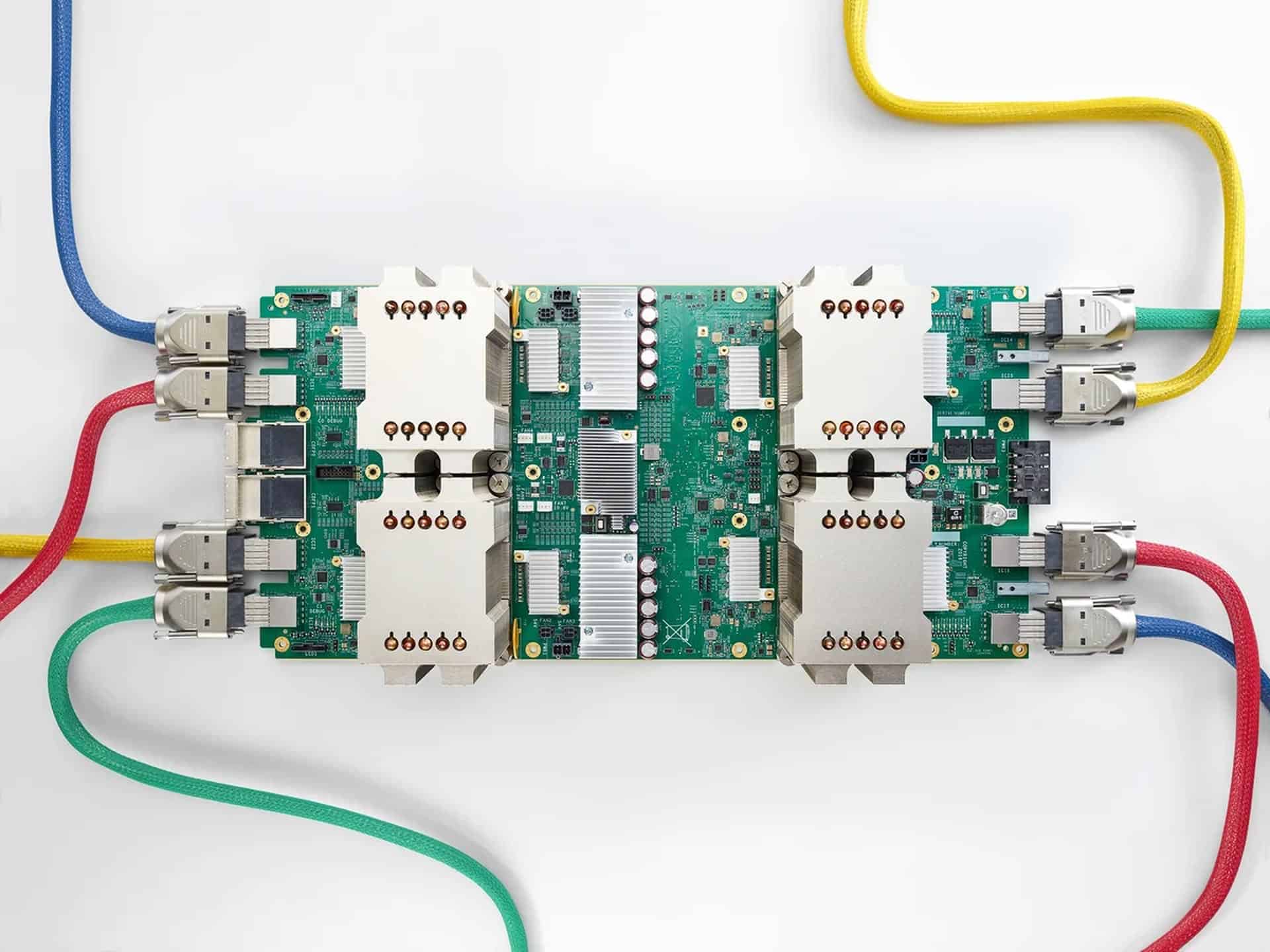

TPUs (Tensor Processing Units)

TPUs are Google’s custom-built processors designed specifically for accelerating deep learning tasks. Unlike GPUs, which are adapted for various uses, TPUs are laser-focused on handling tensor operations, which are the core mathematical processes of deep learning.
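Google describes the TPU's matrix multiply unit as a systolic array. In such an array, a grid of simple processing elements (PEs) passes operands to its neighbors every clock cycle instead of repeatedly going back to memory. The toy model below simulates an *output-stationary* systolic array, in which PE (i, j) keeps its running sum for C[i][j] in place. Values of A flow in from the left and values of B from the top, each skewed so that PE (i, j) receives A[i][k] and B[k][j] at cycle i + j + k. This dataflow is a simplification chosen for readability, not the design of any specific TPU generation, and `systolic_matmul` is a hypothetical name.

```python
def systolic_matmul(A, B):
    """Cycle-level toy model of C = A @ B on an M x N grid of PEs."""
    M, K = len(A), len(A[0])
    K2, N = len(B), len(B[0])
    assert K == K2, "inner dimensions must match"
    acc = [[0.0] * N for _ in range(M)]  # one accumulator per PE
    # The last PE receives its last operands at cycle (M-1)+(N-1)+(K-1),
    # so the whole computation takes M + N + K - 2 cycles.
    cycles = M + N + K - 2
    for t in range(cycles):
        # On real hardware, every PE does its work in the same clock cycle.
        for i in range(M):
            for j in range(N):
                k = t - i - j  # which operand pair reaches PE (i, j) at cycle t
                if 0 <= k < K:
                    acc[i][j] += A[i][k] * B[k][j]
    return acc, cycles

A = [[1, 2], [3, 4]]
B = [[5, 6], [7, 8]]
C, cycles = systolic_matmul(A, B)
print(C, cycles)  # [[19.0, 22.0], [43.0, 50.0]] 4
```

In a real array every PE performs one multiply-add per cycle in parallel. The number of cycles therefore grows with the sum of the matrix dimensions rather than with the total number of multiplications.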

Key benefits:

- Faster tensor operations: Can greatly speed up training and inference for deep learning models.

- Energy efficiency: Often deliver more performance per watt than GPUs on the workloads they target, which can make them cost-effective for large-scale AI projects.

- Deep integration with Google's frameworks: Optimized for TensorFlow and JAX, Google's own machine learning frameworks, enabling high performance for their users.

Limitations:

- Limited flexibility: Best suited for tasks involving deep learning and tensor-based computations; not ideal for non-AI tasks.

- Software constraints: Works best with TensorFlow and JAX. Developers using other frameworks, such as PyTorch (via PyTorch/XLA), may face extra setup or compatibility issues.

NPUs (Neural Processing Units)

NPUs are specialized processors designed to optimize AI inference on edge devices, like smartphones, tablets, and IoT gadgets. Unlike GPUs and TPUs that often handle training, NPUs focus on running pre-trained models efficiently on-device.
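Many NPUs favor low-precision integer arithmetic, which needs far less energy and memory than 32-bit floating point. A common way to prepare a pre-trained model for such hardware is quantization, which maps float weights and activations to small integers that share a scale factor. The sketch below shows symmetric per-tensor int8 quantization of a single dot product. The functions and the sample values are hypothetical, and real NPU toolchains use their own vendor-specific schemes.

```python
def quantize(values, num_bits=8):
    """Map floats to signed integers that share one scale factor (symmetric)."""
    qmax = 2 ** (num_bits - 1) - 1  # 127 for int8
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def int_dot(qa, qb):
    """Integer-only dot product (hardware would typically accumulate in int32)."""
    return sum(a * b for a, b in zip(qa, qb))

weights = [0.5, -1.2, 0.3, 0.8]  # hypothetical pre-trained weights
inputs = [1.0, 0.25, -0.5, 2.0]  # hypothetical activations

qw, sw = quantize(weights)
qx, sx = quantize(inputs)

approx = int_dot(qw, qx) * sw * sx  # convert back to float once, at the end
exact = sum(w * x for w, x in zip(weights, inputs))
print(exact, approx)
```

The integer result, rescaled once at the end, lands close to the floating-point answer. In exchange for that small loss of precision, the hardware only has to do cheap integer multiply-adds.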

Advantages:

- Real-time processing: Allows for AI tasks like image and speech recognition to be performed directly on devices, which improves speed and user experience.

- Power efficiency: Optimized for low power usage, making them ideal for mobile and battery-operated devices.

- Enhanced security: On-device processing means data doesn’t need to be sent to the cloud, reducing security risks and improving privacy.

Drawbacks:

- Training limitations: Not designed for training AI models from scratch; they are mainly focused on running inference.

- Less mature software ecosystem: Compared to GPUs, NPUs have fewer development tools, potentially making software integration more challenging.

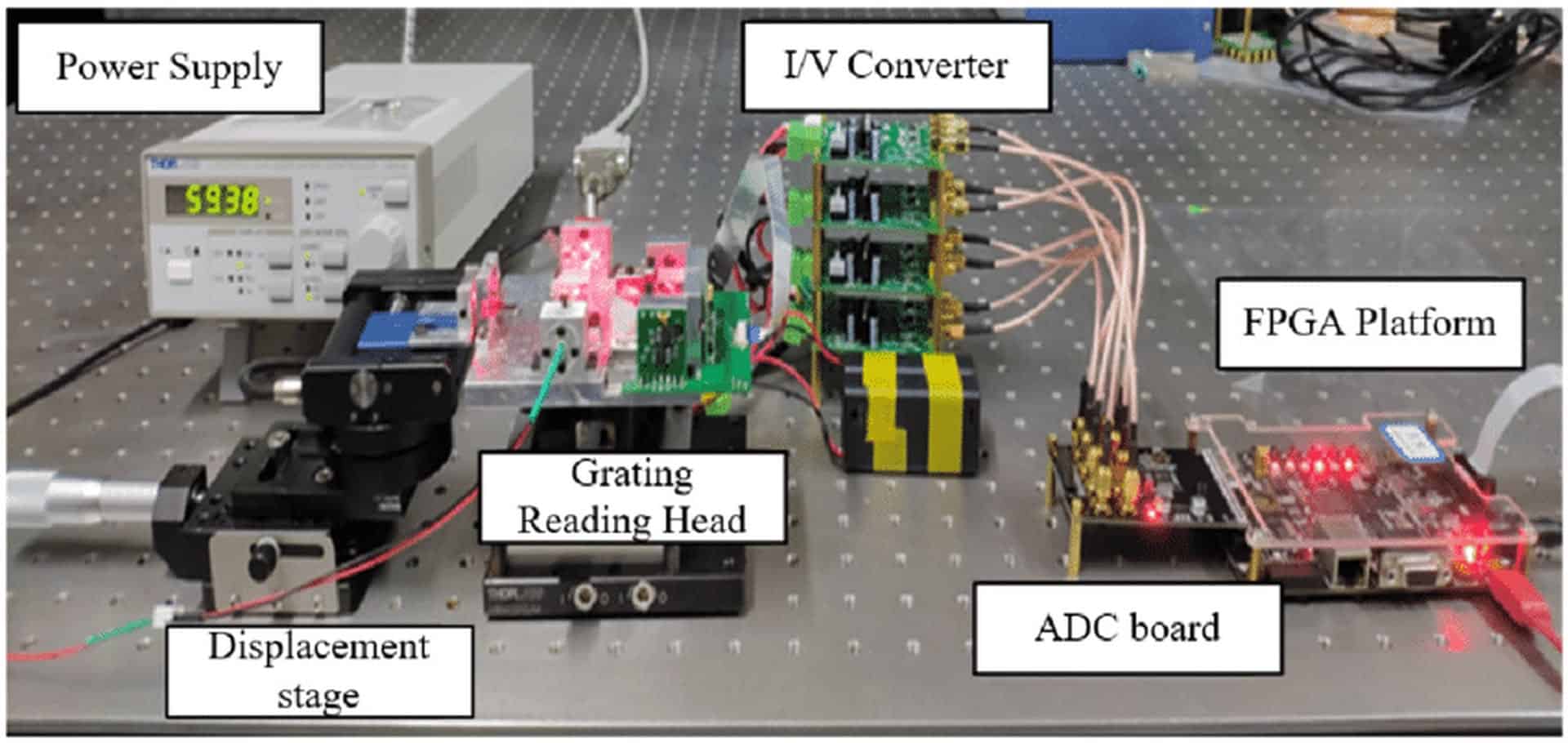

FPGAs (Field-Programmable Gate Arrays)

FPGAs are integrated circuits that can be reprogrammed after manufacturing to perform specific tasks. This flexibility makes them useful for prototyping AI accelerators or building custom hardware solutions that need specific optimizations.
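Much of an FPGA's reconfigurability comes from lookup tables (LUTs). A LUT is a small memory whose stored configuration bits determine which logic function it computes, so "reprogramming" means loading new bits rather than building a new chip. The toy model below uses a single 2-input LUT with the hypothetical class name `LUT2`. Real FPGAs combine many larger LUTs with programmable routing and dedicated arithmetic and memory blocks.

```python
class LUT2:
    """Toy 2-input lookup table: 4 configuration bits, one per input combination."""

    def __init__(self, config_bits):
        self.reprogram(config_bits)

    def reprogram(self, config_bits):
        # Loading new bits changes the logic function; the "hardware" stays the same.
        assert len(config_bits) == 4
        self.config = list(config_bits)

    def __call__(self, a, b):
        # The input pair (a, b) selects one of the 4 stored bits.
        return self.config[(a << 1) | b]

# Truth tables listed for inputs (a, b) = (0,0), (0,1), (1,0), (1,1).
AND = [0, 0, 0, 1]
XOR = [0, 1, 1, 0]

lut = LUT2(AND)
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 0, 0, 1]
lut.reprogram(XOR)
print([lut(a, b) for a in (0, 1) for b in (0, 1)])  # [0, 1, 1, 0]
```

The same idea, scaled up to many thousands of LUTs and connections, lets developers reshape an FPGA into a datapath tailored to a particular ML model.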

Pros:

- Customizability: Developers can reprogram FPGAs to optimize for different types of ML models, which can be beneficial for evolving projects.

- Energy efficiency: They can be fine-tuned for specific tasks, reducing energy consumption compared to general-purpose hardware.

- Low latency: FPGAs can process data with very low latency, which is crucial for time-sensitive applications.

Cons:

- Complex development: Programming FPGAs requires specialized knowledge, making them harder to work with than more standardized hardware like GPUs.

- Performance vs. cost: While they can offer excellent performance, the cost of development and programming can be high.

ASICs (Application-Specific Integrated Circuits)

ASICs are custom-designed chips built for a single task or application. They are the most optimized form of hardware for specific functions, like cryptocurrency mining or running specialized AI models. One well-known example is Google's TPU, an ASIC designed for deep learning.

Benefits:

- Ultimate efficiency: ASICs can be designed for the highest possible performance and energy efficiency on a specific task.

- Low power usage: Their single-function design allows them to run at lower power while maintaining high performance.

Challenges:

- Lack of flexibility: Once an ASIC is designed, it can’t be modified for new tasks, unlike FPGAs or GPUs.

- High development costs: Creating an ASIC is expensive and only makes sense for large-scale projects where the investment will be offset by the performance gains.
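Whether an ASIC "makes sense" often comes down to a break-even calculation. The one-time design cost (often called non-recurring engineering, or NRE) is recovered only once enough units are deployed, each cheaper than the alternative. The sketch below is a simplified model with hypothetical figures and a hypothetical function name. It considers only per-unit hardware cost and ignores power savings, time to market, and other real-world factors.

```python
import math

def break_even_units(nre_cost, asic_unit_cost, alternative_unit_cost):
    """Smallest unit count at which NRE + ASIC units cost no more than the alternative."""
    savings_per_unit = alternative_unit_cost - asic_unit_cost
    if savings_per_unit <= 0:
        return None  # the ASIC never pays off on unit cost alone
    return math.ceil(nre_cost / savings_per_unit)

# Hypothetical: $20M to design, $500 per ASIC vs. $2,000 per off-the-shelf device.
print(break_even_units(20_000_000, 500, 2_000))  # 13334
```

Below that volume, general-purpose hardware is cheaper. Far above it, the ASIC's per-unit savings dominate, which is why custom chips mostly appear in very large deployments.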

Comparing the AI hardware: Choosing the right tool for the job

- GPUs: Best for general-purpose deep learning tasks with strong software support. Suitable for research, training, and tasks that require parallel processing.

- TPUs: Ideal for deep learning with TensorFlow or JAX, especially for large-scale projects that need high efficiency and low power use.

- NPUs: Perfect for running AI on edge devices where power and real-time processing are essential.

- FPGAs: Great for custom projects that require flexibility and low-latency processing but demand expertise to program.

- ASICs: Excellent for high-volume, specialized tasks where maximum efficiency is required and the budget allows for custom hardware.

- Quantum Computing: Promising for future use in highly complex AI tasks, but not yet practical for most applications.

The future frontier: Quantum computing

Quantum computing is still in its early stages but has the potential to revolutionize AI hardware. Quantum computers use quantum bits (qubits), which can exist in a superposition of 0 and 1, to perform calculations in ways that traditional binary computing cannot. This could allow much faster processing of certain complex problems, such as optimizing deep learning algorithms or processing enormous datasets.
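To give a feel for what a qubit is, the sketch below simulates a tiny quantum register on an ordinary computer as a list of complex amplitudes, one per basis state. It applies a Hadamard gate to each qubit to produce an equal superposition. The helper names are hypothetical, and the simulation shows no quantum speedup. It does show that n qubits need 2**n amplitudes, which is why classical machines cannot efficiently emulate large quantum systems.

```python
import math

def hadamard(state, target, n_qubits):
    """Apply a Hadamard gate to qubit `target` (0 = least significant bit)."""
    h = 1 / math.sqrt(2)
    new = state[:]
    for i in range(2 ** n_qubits):
        if not (i >> target) & 1:  # visit each (target=0, target=1) pair once
            j = i | (1 << target)
            a0, a1 = state[i], state[j]
            new[i] = h * (a0 + a1)
            new[j] = h * (a0 - a1)
    return new

def probabilities(state):
    """Measurement probability of each basis state = |amplitude|^2."""
    return [abs(a) ** 2 for a in state]

n = 2
state = [0j] * (2 ** n)  # 2 qubits -> 4 amplitudes
state[0] = 1 + 0j        # start in |00>
for q in range(n):
    state = hadamard(state, q, n)  # equal superposition of all 4 basis states
print([round(p, 3) for p in probabilities(state)])  # [0.25, 0.25, 0.25, 0.25]
```

Each extra qubit doubles the length of `state`. A 50-qubit register would already need about 10^15 amplitudes, far beyond the memory of typical computers.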

Quantum computing holds great promise for AI. For certain classes of problems it could be dramatically faster than traditional computers (exponentially so, in some cases), and it might improve the efficiency of neural network training. However, the technology still faces significant challenges with qubit stability and scaling. Additionally, building and operating quantum computers is highly complex and costly, which limits their current practical use.

Why does AI hardware matter?

AI hardware matters because of its central role in advancing AI, and it doesn't just power data centers: it's in everyday technology. Your smartphone, smart speakers, and even self-driving cars use AI hardware to process information quickly and make decisions on the spot. Because data can be processed directly on the device, these applications become faster, more energy-efficient, and more secure.

In larger systems like data centers, AI hardware allows for faster training of complex models. This means advancements can be made in fields like medicine, climate research, and robotics more quickly. As AI continues to grow, improvements in AI hardware will be key to making these tools more powerful, cost-effective, and accessible.

Understanding what AI hardware is helps you appreciate the specialized infrastructure that powers complex artificial intelligence operations.
